Hallucination is now the real AI bottleneck

Five charts to start your day

CHART 1 • Hallucination is now the real AI bottleneck

This matters because the usefulness of AI now hinges less on how clever models sound and more on whether they can be trusted. As AI moves from demos into healthcare, law and finance, a single wrong answer is no longer a curiosity. It is a liability.

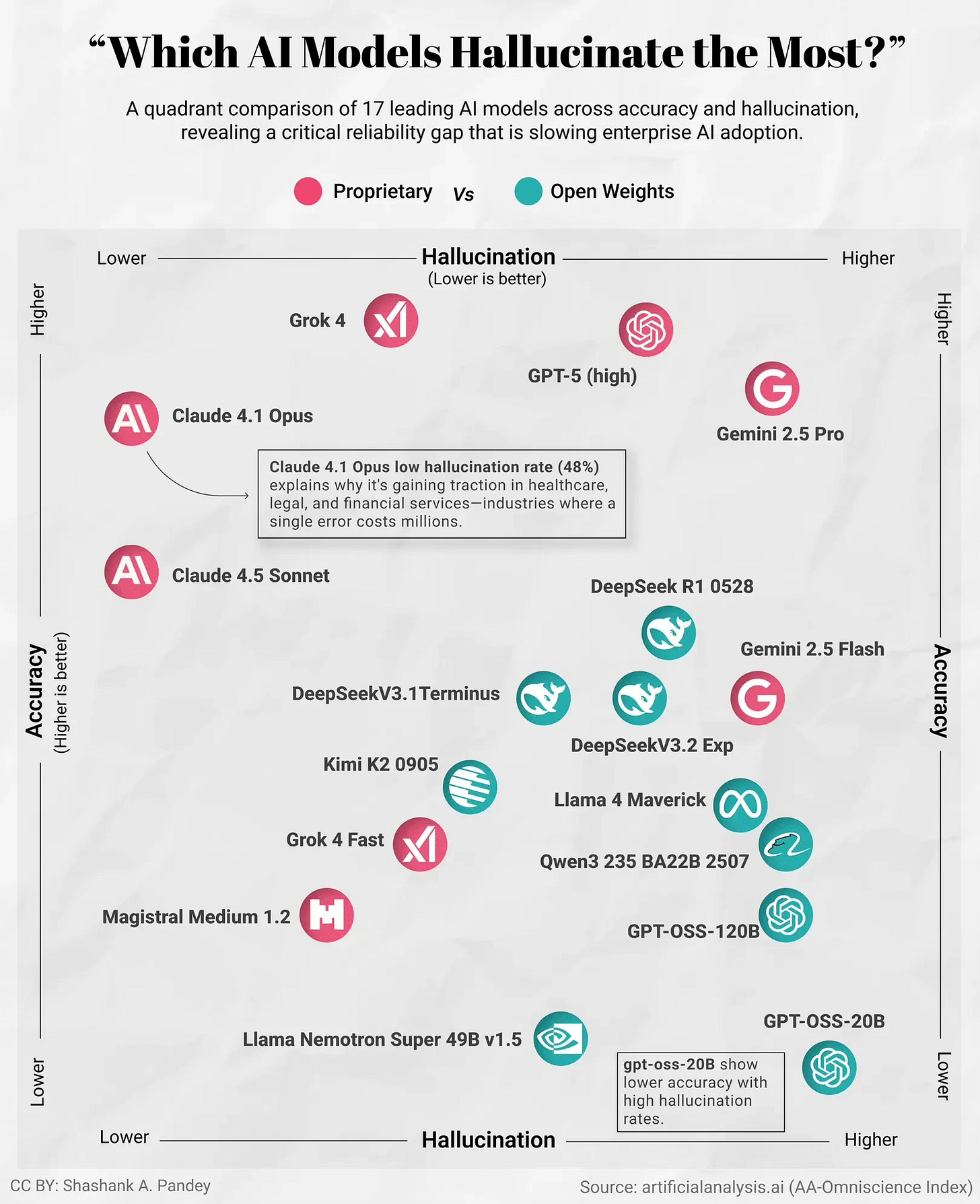

This chart compares leading AI models across two dimensions: accuracy and hallucination. Lower hallucination is better. What stands out is how wide the gap has become. Some of the most capable models also hallucinate more often, while others sacrifice a bit of raw performance to stay grounded. Claude models cluster toward lower hallucination, which helps explain their traction in regulated industries. Several open-weight models sit at the opposite extreme, offering flexibility and cost advantages but with far higher error risk.
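For readers who want to see how the two dimensions can pull apart, here is a minimal sketch in Python. It rests on assumptions that are not taken from the chart: accuracy is treated as the share of all questions answered correctly, and hallucination rate as the share of not-correct questions where the model gave a wrong answer instead of declining. The chart's source may define both differently, and the counts below are invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class EvalCounts:
    """Hypothetical tallies from a question-answering benchmark."""
    correct: int
    incorrect: int
    abstained: int  # model declined to answer


def accuracy(c: EvalCounts) -> float:
    """Share of all questions answered correctly (assumed definition)."""
    total = c.correct + c.incorrect + c.abstained
    return c.correct / total if total else 0.0


def hallucination_rate(c: EvalCounts) -> float:
    """Of the questions not answered correctly, the share answered wrongly
    rather than declined (assumed definition, not the chart's own)."""
    missed = c.incorrect + c.abstained
    return c.incorrect / missed if missed else 0.0


# Invented numbers: one model that almost always answers, one that often declines.
bold = EvalCounts(correct=60, incorrect=38, abstained=2)
cautious = EvalCounts(correct=55, incorrect=15, abstained=30)

for name, counts in [("bold", bold), ("cautious", cautious)]:
    print(f"{name}: accuracy={accuracy(counts):.2f}, "
          f"hallucination={hallucination_rate(counts):.2f}")
```

Under these assumed definitions, the cautious model gives up a few points of accuracy but is wrong far less often when it does not know, which is the trade-off the chart describes.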

The deeper point is that scale and fluency are no longer enough. Bigger models trained on more data do not automatically become more reliable. In fact, as systems grow more confident and articulate, their mistakes can become harder to spot. That creates a trust problem, not a technology problem. Until hallucination rates fall meaningfully, AI adoption in high-stakes settings will remain cautious and uneven.

Source: Visual Capitalist

There is a wider lesson here about technology and power. Systems that speak fluently invite belief. That makes their failures more dangerous, not less. Progress, at this stage, is less about sounding smarter and more about knowing when not to answer.
